Snowflake SnowPro Advanced: Data Engineer - Practice Exam 2

The SnowPro Advanced: Data Engineer credential recognizes specific knowledge and skills used to apply comprehensive data engineering principles using Snowflake. Passing this Certification showcases your ability to source and transform data from Data Lakes and APIs, design end-to-end near-time streams, and evaluate performance metrics, among other things. 2 + years of hands-on Snowflake Practitioner experience in a Data Engineering role prior is recommended for attempting this exam.

PDF Exam Questions

Q1

Is it a good idea to use a Resource Monitor to control credit usage for Snowpipe?

Q2

Which of the following features incur in data transfer costs?

Q3

Which command can we use to list all the files that have been staged in a named external stage like Amazon S3 without copying them into Snowflake?

Q4

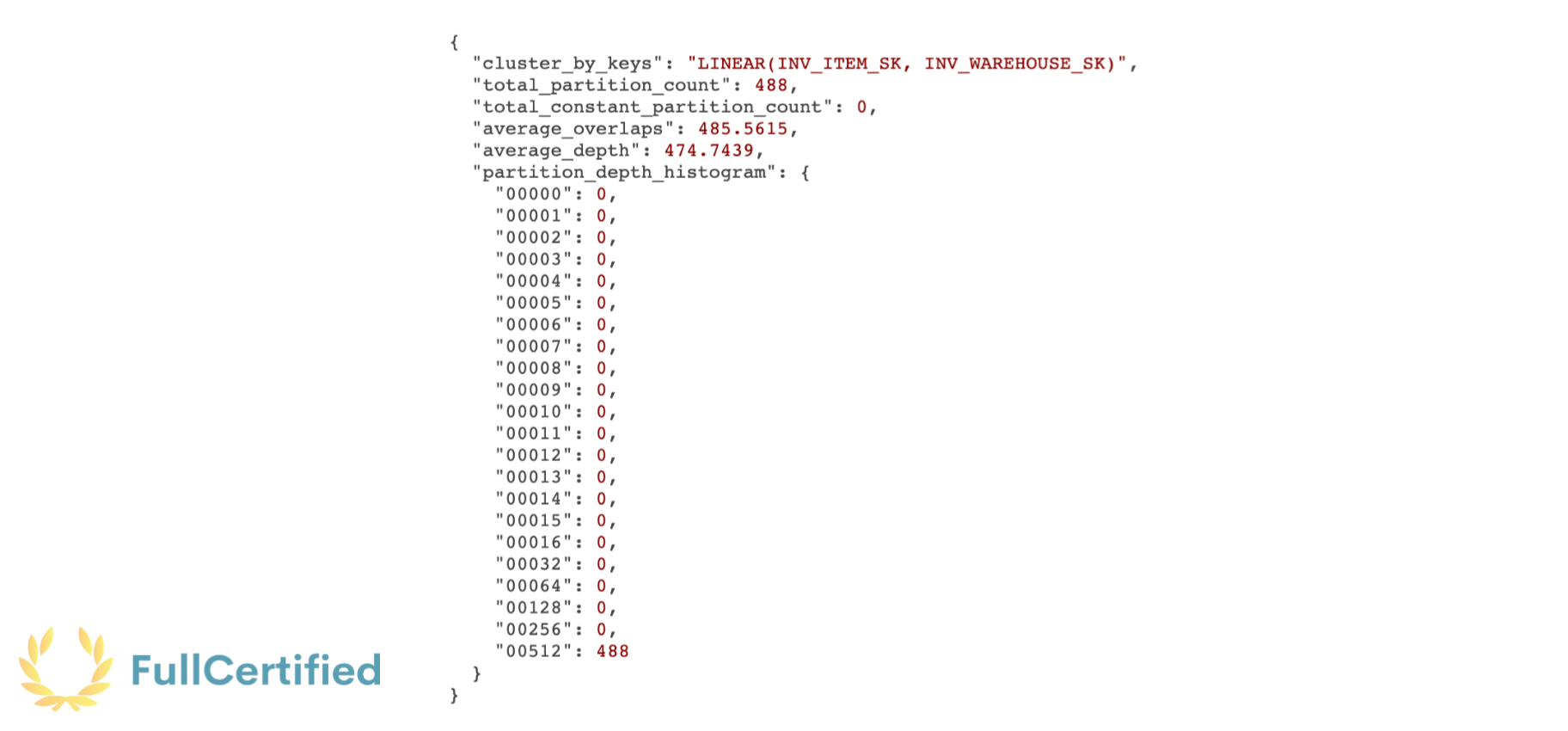

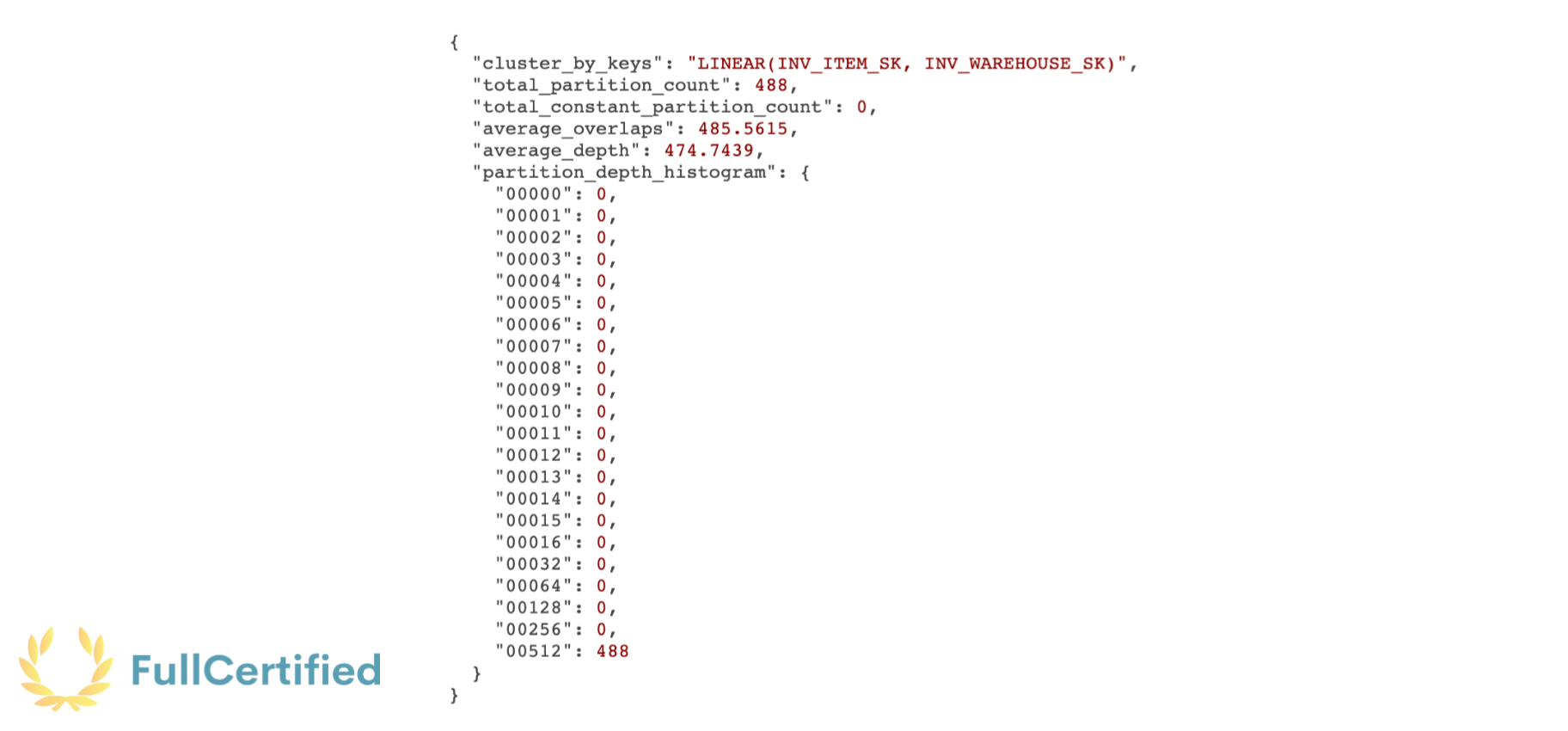

After running the following command

SELECT SYSTEM$CLUSTERING_INFORMATION('INVENTORY', '(INV_ITEM_SK, INV_WAREHOUSE_SK)');Snowflake returns the following information:

How can we check that the Inventory table is not well-clustered?

Q5

Does the Search Optimization Service involve extra storage and computing costs?

Q6

What other parameter does Snowflake recommend adding when organizing files into logical paths?

Q7

If you want your Data Warehouse available with no lag time, is it a good idea to consider disable the auto-suspend functionality?

Q8

Which of these commands require a running warehouse?

Q9

Which of the following JOIN statements are supported by Snowflake?

Q10

Which command can we use to check the time zone in which your Snowflake account is running?

Q11

After creating the table MY_TABLE, we execute the following commands:

CREATE STREAM MYSTREAM ON TABLE MYTABLE;

INSERT INTO MYTABLE VALUES (15);What will be the output of executing the following command?

SELECT SYSTEM$STREAM_HAS_DATA('MYSTREAM');Q12

How can we avoid exposing sequence-generated column values in UDFs?

Q13

What information does an API Integration object store?

Q14

Our company wants to execute a complicated ETL process inside Snowflake every day on the new data that is coming. What is the best way to automate it?

Q15

Which is generally the slowest option when using the COPY INTO command?

Q16

What types of services created and managed by the Cloud Providers does an event-driven pipeline in Snowflake depend on?

Q17

Does Snowflake increase the stream’s offset data retention period if it’s less than 90 days?

Q18

In which locations can you define the file format settings for your staged data?

Q19

Does Snowpipe use file-loading metadata associated with each pipe object to prevent reloading and duplicating the same files?

Q20

Should Data Integration Tech Partner solutions be used to load the data, whereas Snowflake should be used to deliver data to stages?

Q21

Is there any direct charge for using the Kafka connector?

Q22

When does a stream become stale?

Q23

What is the purpose of the “MINS_TO_BYPASS_MFA” command?

Q24

Are Scoped Transactions treated as nested transactions?

Q25

After how many days does the Snowflake data loading history (that can be checked using the COPY_HISTORY command) of the COPY INTO command and Snowpipe expire?

Q26

Are floating point data types and collations supported by the Search Optimization Service?

Q27

What two options can we specify in the FILE_FORMAT attribute from the COPY INTO command?

Q28

What are the supported values for the VALIDATION_MODE parameter while using the COPY INTO <TABLE> command?

Q29

What are some of the advantages when removing loaded data files from the stages?

Q30

After executing the following CTE (Common Table Expression), you get the following error:

WITH MY_TABLE AS (

SELECT $start_date AS MY_DATE

UNION ALL

SELECT DATEADD(DAY, 1, MY_DATE) FROM MY_TABLE WHERE MY_DATE < $end_date

)

SELECT * FROM MY_TABLE;"Recursion exceeded max iteration count (100)"

How can you solve it?

Q31

Imagine we have a table called “VISITOR” with the following data:

Which Snowflake function will allow us to return a concatenated list of countries in which each person have been?

Q32

In which of the following options is it optional to add the column names when returning the clustering information with the SYSTEM$CLUSTERING_INFORMATION and SYSTEM$CLUSTERING_DEPTH commands?

Q33

Which are the benefits of the Search Optimization Service?

Q34

Is an existing Clustering Key supported when a table is created using the CREATE TABLE… AS SELECT command?

Q35

After querying a timezone column, where we supposedly had EST timezone data, we can see that the data has a “Z“at the end. How can this be possible?

Q36

Which of the following statements are possible ways to reduce the risk of SQL injection attacks while using Stored Procedures?

Q37

How many files can the COPY INTO operation load as the maximum when providing a discrete list of files?

Q38

When should we use the matchedClause in Snowflake?

Q39

Can data be inserted multiple times, causing duplicated records, if you run multiple instances of the Kafka connector listening to the same topics?

Q40

Can the same column be specified simultaneously in a masking policy signature and a row access policy signature?

Q41

Can functions used in the definition of a materialized view be non-deterministic?

Q42

Which of the following statements are correct about the TASK_HISTORY function?

Q43

Which two commands can we use to refresh the metadata of an external table hosted on the AWS Cloud Storage?

Q44

A Snowflake developer has created a masking policy with the following syntax:

create or replace masking policy mp

AS (val string) returns string ->

CASE

WHEN current_role() in('DEVROLE')

THEN val

ELSE '*********'

END;Which statements are correct about this policy?

Q45

What does the “SECURE” optional parameter indicate when creating an external function?

Q46

Which statement is false about the following task?

CREATE TASK mytask1

WAREHOUSE = mywh

SCHEDULE = '5 minute'

WHEN

SYSTEM$STREAM_HAS_DATA('ST1')

AS

INSERT INTO mytable(id,nm)

SELECT id, nm

FROM st1

WHERE

METADATA$ACTION='INSERT';Q47

Which of the following Spark DataFrames functions does the Snowflake connector support?

Q48

Which of the following statements is true about API Integration objects?

Q49

Which are the possible Supported Query Types that the ADD SEARCH OPTIMIZATION function accepts using the SEARCH_METHOD parameter?

Q50

Which of the following sentences are correct about the Snowflake Connector for Python?

Q51

How can we check the status of an asynchronous query using the Python Connector?

Q52

When should a Data Engineer enable the “STRIP_OUTER_ARRAY” file format option in the COPY INTO command?

Q53

What data will return the Snowflake after running the following command on the NOT-clustered INVENTORY table?

SELECT SYSTEM$CLUSTERING_INFORMATION("INVENTORY")Q54

When should we use the notMatchedClause in Snowflake?

Q55

When creating a file format, which file format should we specify when using the "STRIP_OUTER_ARRAY = TRUE | FALSE" parameter?

Q56

How does Snowflake prevent data duplication when a COPY statement is executed?

Q57

Can natural JOINs be combined with “ON” clauses?

Q58

A table has been created using the following syntax:

CREATE OR REPLACE TABLE MYTABLE(

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP(),

age NUMBER

);After performing a COPY operation, all the results have the same timestamp in the “created_at” column. Why is this happening?

Q59

Which of the following options is not a compression technique for AVRO file formats?

Q60

After adding the Search Optimization service in a Snowflake table called MY_TABLE, we forgot to add it in two columns, so we ran the same command in the other two columns, as we can see in the following code:

ALTER TABLE MY_TABLE ADD SEARCH OPTIMIZATION ON EQUALITY(col1, col2);

ALTER TABLE MY_TABLE ADD SEARCH OPTIMIZATION ON EQUALITY(col3, col4);What is going to be the result?

Q61

What types of tables are good candidates for implementing search optimization?

Q62

Which statements are true about the Snowflake Spark connector's internal and external transfer modes?

Q63

One query takes a lot of time, and you see in the query profiler the following information:

What might be the cause of this?

Q64

Which of the following options is a good practice if we need to recreate a pipe (CREATE OR REPLACE PIPE to modify most pipe properties) that automates data loads using event notifications?

Q65

We tried to COPY INTO Snowflake several thousands of files. However, it didn’t load all of them. What is more likely to be the cause?